Cross-Datacenter Replication (XDR) Architecture

The Aerospike Cross-Datacenter Replication (XDR) feature asynchronously replicates cluster data over higher-latency links, typically WANs.

This discussion is a high-level architectural overview of Aerospike XDR.

Terminology

- Aerospike refers to a remote destination as a datacenter. Examples, diagrams, and parameters often use the abbreviation "DC", like DC1, DC2, DC3, and so on.

- The process of sending data to a remote datacenter is known as shipping.

Configuring XDR

For specific details on how to configure XDR, including sample parameters for the features discussed in this overview, see Configure Cross-Datacenter XDR.

Replication granularity: namespace, set, or bin

Replication is defined per datacenter. You can also configure the granularity of the data to be shipped:

- A single namespace or multiple namespaces.

- All sets or only specific record sets.

- All bins or a subset of bins.

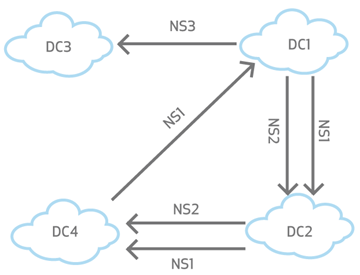

Shipping namespaces

Aerospike nodes can have multiple namespaces. You can configure different namespaces to ship to different remote clusters. In this illustration, DC1 is shipping namespaces NS1 and NS2 to DC2, and shipping namespace NS3 to DC3. Use this flexibility to configure different replication rules for different data sets.

Shipping specific sets

You can configure XDR to ship certain sets to a datacenter. The combination of namespace and set determines whether to ship a record. Use sets if not all data in a namespace in a local cluster needs to be replicated in other clusters.

Shipping bins

You can configure XDR to ship only certain bins and ignore others. By default, all bins are shipped. Use bin-policy to control which bins are shipped.
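The namespace, set, and bin filters described above can be pictured as a single decision applied to each record before shipping. The following is a minimal sketch of that decision, not Aerospike's implementation: the `ShipRule` class, the `DC2_RULES` table, and the `select_for_shipping` function are hypothetical names invented for illustration, and real deployments express these rules in the XDR configuration rather than in application code.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional


@dataclass(frozen=True)
class ShipRule:
    """Hypothetical per-namespace rule for one destination datacenter."""
    sets: Optional[FrozenSet[str]] = None  # None means "all sets"
    bins: Optional[FrozenSet[str]] = None  # None means "all bins"


# Hypothetical rules for shipping to DC2, keyed by namespace name.
DC2_RULES: Dict[str, ShipRule] = {
    "NS1": ShipRule(),  # ship everything in NS1
    "NS2": ShipRule(sets=frozenset({"users"}),
                    bins=frozenset({"name", "email"})),
}


def select_for_shipping(rules, namespace, set_name, bins):
    """Return the bins to ship for a record, or None if it is not shipped."""
    rule = rules.get(namespace)
    if rule is None:
        return None  # namespace is not configured for this datacenter
    if rule.sets is not None and set_name not in rule.sets:
        return None  # set is filtered out
    if rule.bins is None:
        return dict(bins)  # default: all bins are shipped
    return {name: value for name, value in bins.items() if name in rule.bins}


record_bins = {"name": "Ada", "email": "ada@example.com", "score": 7}
ns1_result = select_for_shipping(DC2_RULES, "NS1", "anything", record_bins)
ns2_result = select_for_shipping(DC2_RULES, "NS2", "users", record_bins)
ns2_other_set = select_for_shipping(DC2_RULES, "NS2", "sessions", record_bins)
ns3_result = select_for_shipping(DC2_RULES, "NS3", "users", record_bins)
```

In this sketch, a record in NS1 ships with all of its bins, a record in the `users` set of NS2 ships with only the two listed bins, and records in other sets or in unconfigured namespaces are not shipped at all.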

Shipping record deletes

In addition, you can configure XDR to ship record deletions. Such deletes can be either those from a client or those that result from expiration or eviction by the namespace supervisor (nsup).

- By default, record deletes by clients are shipped (durable deletes are always shipped).

- By default, record deletes from expiration or eviction by nsup are not shipped.

Compression disabled by default

By default, XDR does not compress shipment data. To save bandwidth, if necessary, you can enable compression.

General mechanism of XDR: comparison of LUT and LST

- As one of its basic functions, the Aerospike Server Daemon (ASD, the executable asd) keeps track of a record's digest and Last Update Time (LUT) based on write transactions.

- The XDR component of ASD tracks a record's partition's Last Ship Time (LST). Any record in a partition whose Last Update Time (LUT) is greater than the partition's LST is a candidate for shipping. The LST is persisted by partition.

- The XDR component of ASD compares the record's LUT to the record's partition's LST. If the LUT is greater than the LST, the record is shipped to the defined remote nodes' corresponding namespaces and partitions in the defined remote datacenters.

- These replication writes are also tracked in case they are needed to recover from a failed master node.

- If XDR is configured to ship user-initiated deletes or deletes based on expiration or eviction, these write transactions are also shipped to the remote datacenters.

- The record's partition's LST is updated.

- The process repeats.
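The LUT and LST comparison in the steps above can be sketched in a few lines. This is a simplified model under stated assumptions, not the server's code: the `Record` and `Partition` classes and the `ship_pending` method are hypothetical, timestamps are plain integers, and concerns such as persistence, retries, and concurrent writes during a shipping pass are ignored.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Record:
    digest: str
    lut: int  # Last Update Time, set on every write


@dataclass
class Partition:
    lst: int = 0  # Last Ship Time, tracked per partition
    records: Dict[str, Record] = field(default_factory=dict)

    def write(self, digest: str, now: int) -> None:
        """A client write creates or updates the record and stamps its LUT."""
        self.records[digest] = Record(digest, now)

    def ship_pending(self, now: int) -> List[str]:
        """Return digests whose LUT is greater than the LST, then advance the LST."""
        pending = sorted(r.digest for r in self.records.values()
                         if r.lut > self.lst)
        self.lst = now  # the partition's LST is updated after shipping
        return pending


partition = Partition()
partition.write("a", now=10)
partition.write("b", now=12)
first_pass = partition.ship_pending(now=15)   # both records are newer than LST 0

partition.write("a", now=20)                  # only "a" changes after the first pass
second_pass = partition.ship_pending(now=25)
third_pass = partition.ship_pending(now=30)   # nothing changed since LST 25
```

Because the LST is kept per partition rather than per record, a single comparison against each record's LUT is enough to find everything that still needs shipping.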

XDR Topologies

XDR configurable topologies are as follows. XDR configuration examples for implementing the topologies described here are in Example configuration parameters for XDR topologies.

Active-passive topology

In an active-passive topology, clients write to a single cluster. Consider two clusters, A and B. Clients write only to cluster A. The other cluster, cluster B, is a standby cluster that can be used for reads. Client writes are shipped from cluster A to cluster B. However, client writes to cluster B are not shipped to cluster A. Additionally, XDR offers a way to completely disable client writes to cluster B, instead of just not shipping them.

A common use case for active-passive is to offload performance-intensive analysis of data from a main cluster to an analysis cluster.

Mesh topology (active-active)

Multi-Site Clustering and Cross-Datacenter Replication provide two distinct mechanisms for deploying Aerospike in an active-active configuration. For more information on the tradeoffs involved in using either model, see the article Active-Active Capabilities in Aerospike Database 5.

In active-active mesh topology, clients can write to different clusters. When writes happen to one cluster, they are forwarded to the other. A typical use case for an active-active topology is when client writes to a record are strongly associated with one of the two clusters and the other cluster acts as a hot backup.

If the same record can be simultaneously written to two different clusters, an active-active topology is in general not suitable. Refer to "Bin convergence in mesh topology" for details.

An example of the active-active topology is a company with users spread across a wide geographical area, such as North America. Traffic could then be divided between the West Coast and East Coast datacenters. While a West Coast user can write to the East Coast datacenter, it is unlikely that writes from this user will occur simultaneously in both datacenters.

Bin convergence in mesh topology

Aerospike version 5.4 introduced the bin convergence feature, which can help with write conflicts in mesh/active-active topologies. This feature ensures that the data is eventually the same in all the DCs at the end of replication, even if there are simultaneous updates to the same record in multiple DCs. To achieve this, extra information about each bin's last-update-time (LUT) is stored and shipped to the destination clusters. A bin with a higher timestamp (LUT) is allowed to overwrite a bin with a lower timestamp (LUT). An XDR write operation succeeds when at least one bin update succeeds, and returns the same message for both full and partial successes.

Note that this feature ensures only convergence, not eventual consistency: some of the intermediate updates may be lost. It suits use cases that care more about the final state than the intermediate states. For example, if the application tracks the last-known location of a device, possibly reported by multiple trackers, this feature is a good fit.

If the intermediate updates are important, this feature is not the right choice. For example, in a digital wallet application, every intermediate update matters. In that case, consider multi-site clustering, which prevents conflicts from happening in the first place.
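The per-bin rule behind bin convergence, where a bin with a higher LUT overwrites a bin with a lower LUT, can be illustrated with a small merge function. This is a sketch under stated assumptions rather than the server's algorithm: `merge_bins` is a hypothetical name, each bin is modeled as a `(value, lut)` tuple, and the sketch does not address how bins with equal timestamps are resolved.

```python
def merge_bins(local, incoming):
    """Apply incoming bins to local bins, keeping the higher LUT per bin.

    Both arguments map bin name -> (value, lut).
    Returns (merged_bins, number_of_bins_applied).
    """
    merged = dict(local)
    applied = 0
    for name, (value, lut) in incoming.items():
        if name not in merged or lut > merged[name][1]:
            merged[name] = (value, lut)
            applied += 1
    return merged, applied


# Two datacenters update the same device record at nearly the same time.
dc1 = {"lat": (10.0, 100), "lon": (20.0, 100)}
dc2 = {"lat": (11.0, 105), "lon": (19.0, 95)}

# Each datacenter ships its version to the other.
dc1_after, applied_at_dc1 = merge_bins(dc1, dc2)
dc2_after, applied_at_dc2 = merge_bins(dc2, dc1)

# In this model, a write counts as successful if at least one bin was applied.
write_succeeded_at_dc1 = applied_at_dc1 >= 1
```

Both datacenters end with the same bins, but the `lat` value written at DC1 and the `lon` value written at DC2 are overwritten, which is the loss of intermediate updates that the text describes.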

For more information, see "Bin Convergence".

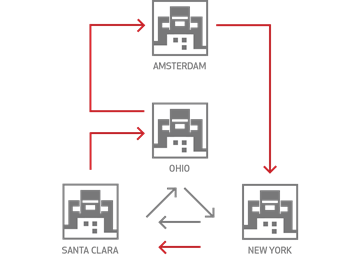

Star topology

Star topology allows one datacenter to simultaneously replicate data to multiple datacenters. To enable XDR to ship data to multiple destination clusters, specify multiple destination clusters in the XDR configuration. Star replication topology is most commonly used when data is centrally published and replicated in multiple locations for low-latency read access from local systems.

Linear chain topology

Aerospike supports the linear chain network topology, sometimes called a "linear daisy chain". In a linear chain topology, one datacenter ships to another, which ships to another, which ships to another, and so on, until reaching a final datacenter that does not ship to any other.

If you are using a chain topology, be sure not to form a ring daisy chain. Make sure that the chain is linear: it ends at a single datacenter and does not loop back to the start or to any other link of the chain.
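One way to check a planned chain before deploying it is to treat the shipping relationships as a directed graph and look for a cycle. The sketch below is a generic depth-first search, not an Aerospike tool: `find_cycle` and the `ships_to` mapping are hypothetical names, and the check is meant only for chain-style layouts. A mesh topology, where clusters intentionally ship to each other, would be reported as a cycle by this function.

```python
def find_cycle(ships_to):
    """Return a list of datacenters forming a shipping loop, or None.

    ships_to maps each datacenter name to the list of datacenters it ships to.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {}
    path = []

    def visit(dc):
        color[dc] = GREY
        path.append(dc)
        for dest in ships_to.get(dc, []):
            state = color.get(dest, WHITE)
            if state == GREY:
                # dest is already on the current path: the chain loops back
                return path[path.index(dest):] + [dest]
            if state == WHITE:
                cycle = visit(dest)
                if cycle:
                    return cycle
        path.pop()
        color[dc] = BLACK
        return None

    for dc in list(ships_to):
        if color.get(dc, WHITE) == WHITE:
            cycle = visit(dc)
            if cycle:
                return cycle
    return None


linear_chain = {"DC1": ["DC2"], "DC2": ["DC3"], "DC3": []}
ring_chain = {"DC1": ["DC2"], "DC2": ["DC3"], "DC3": ["DC1"]}

linear_result = find_cycle(linear_chain)
ring_result = find_cycle(ring_chain)
```

For the linear chain the function finds no loop, while for the ring it returns the path that leads from DC1 back to DC1.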

Hybrid topologies

Aerospike also supports many hybrid combinations of the above topologies.

Local destination and availability zones

While the most common XDR deployment has local and remote clusters in different datacenters, sometimes the "remote" cluster might be in the same datacenter. Common reasons for this are:

- The local destination cluster is only for data analysis. Configure the local destination cluster for passive mode and run all analysis jobs on that cluster. This isolates the local cluster from the workload and ensures availability.

- Datacenters with multiple availability zones, such as with Amazon EC2, can ensure that if one availability zone has a large-scale problem, the other is up. Administrators can configure clusters in multiple availability zones in a datacenter. For best performance, all nodes in a cluster must belong to the same availability zone.

Cluster heterogeneity and redundancy

XDR works for clusters of different sizes, operating systems, storage media, and so on. The XDR failure handling capability allows the source cluster to change size dynamically. It also works when multiple destination datacenters frequently go up and down.

There is no one-to-one correspondence between nodes of the local cluster and nodes of the remote cluster. Even if both have the same number of nodes, partitions can be distributed differently across nodes in the remote cluster than they are in the local cluster. Every master node of the local cluster can write to any remote cluster node. Just like any other client, XDR writes a record to the remote master node for that record.
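The lack of a node-to-node correspondence can be illustrated with a toy partition map. The sketch below rests on several assumptions: SHA-1 stands in for the real record digest, the round-robin `build_partition_map` is a made-up assignment scheme, and all function and node names are hypothetical. The only detail taken from Aerospike itself is that a namespace is divided into 4096 partitions.

```python
import hashlib

N_PARTITIONS = 4096  # Aerospike divides each namespace into 4096 partitions


def partition_id(key: bytes) -> int:
    """Map a key to a partition. SHA-1 is a stand-in hash for illustration."""
    digest = hashlib.sha1(key).digest()
    return int.from_bytes(digest[:2], "little") % N_PARTITIONS


def build_partition_map(nodes):
    """Toy assignment of a master node to every partition (round-robin)."""
    return {pid: nodes[pid % len(nodes)] for pid in range(N_PARTITIONS)}


def remote_master(key: bytes, remote_map) -> str:
    """Like any client, look up the remote master for the record's partition."""
    return remote_map[partition_id(key)]


local_map = build_partition_map(["L1", "L2", "L3"])
remote_map = build_partition_map(["R1", "R2", "R3", "R4", "R5"])

key = b"user:42"
pid = partition_id(key)
local_node = local_map[pid]             # master in the three-node local cluster
remote_node = remote_master(key, remote_map)  # master in the five-node remote cluster
```

The record's partition is the same in both clusters, but which node is master for that partition is decided independently by each cluster's own partition map, so the local master simply writes to whichever remote node currently owns the partition.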

Failure handling

XDR manages the following failures:

- Local node failure.

- Remote link failure.

- Combinations of the above.

In addition to cluster node failures, XDR gracefully handles failures of a network link or of a remote cluster. First, replication via the failed network link or to the failed remote cluster is suspended. Then, once the issue has been resolved, XDR resumes shipping to the previously unavailable remote cluster and catches up. Replication via other functioning links to other functioning remote clusters remains unaffected at all times.

Local node failure

XDR offers the same redundancy as a single Aerospike cluster. The master for any partition is responsible for replicating writes for that partition. When the master fails and a replica is promoted to master, the new master takes over where the failed master left off.

Communications failure

If the connection between the local and the remote cluster drops, each master node records the point in time when the link went down and shipping is suspended for the affected remote datacenter. When the link becomes available again, shipping resumes in two ways:

- New client writes are shipped just as they were before the link failure.

- Client writes that happened during the link failure, that is, client writes that were held back while shipping was suspended for the affected remote cluster, are shipped.
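The suspend-and-catch-up behavior described above can be modeled with a small state machine for one remote datacenter. This is a minimal sketch, not how the server tracks its backlog: the `LinkShipper` class and its methods are hypothetical, timestamps are plain integers, and the sketch keeps every write in memory purely to make the catch-up step visible.

```python
class LinkShipper:
    """Toy model of shipping to one remote datacenter over a link that can fail."""

    def __init__(self):
        self.link_up = True
        self.down_since = None  # point in time when the link went down
        self.writes = []        # (timestamp, key) for every client write
        self.shipped = []       # keys shipped to the remote datacenter, in order

    def client_write(self, key, now):
        self.writes.append((now, key))
        if self.link_up:
            self.shipped.append(key)  # normal shipping
        # while the link is down, the write is held back

    def link_down(self, now):
        self.link_up = False
        self.down_since = now

    def link_restored(self):
        """Ship the writes held back during the outage, then resume normally."""
        if self.link_up:
            return []
        backlog = [key for t, key in self.writes if t >= self.down_since]
        self.shipped.extend(backlog)
        self.link_up = True
        self.down_since = None
        return backlog


shipper = LinkShipper()
shipper.client_write("k1", now=1)
shipper.link_down(now=5)
shipper.client_write("k2", now=6)   # held back
shipper.client_write("k3", now=7)   # held back
caught_up = shipper.link_restored()
shipper.client_write("k4", now=9)   # shipped as before the failure
```

After the link is restored, the two held-back writes are shipped first and later writes flow as they did before the failure.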

When XDR is configured with a star topology, a cluster can simultaneously ship to multiple datacenters. If one or more datacenter links drop, XDR continues to ship to the remaining available datacenters.

XDR can also handle more complex scenarios, such as local node failures combined with remote link failures.

Combination failure

XDR also seamlessly manages combination failures, such as a local node failure combined with a remote link failure, or a link failure while XDR is shipping historical data.