Rate Limiting Spark Users

Aerospike provides rate-limiting controls, which are not built into Spark, to prevent Spark jobs from consuming excessive Aerospike database resources and causing severe operational problems.

Rate limiting is a shared responsibility between the Aerospike administrator and the Spark application developers, as described in the Shared Responsibility section.

Requirements

Rate limiting is only supported in:

- Aerospike database 5.6 and later

- Spark connector 3.2.0 and later

Set Rate Quotas in the Aerospike Server

Aerospike rate quotas, expressed in records per second, act as a rate limiter or circuit breaker that applies to read and write operations in the Aerospike server.

Rate quotas apply to an Aerospike role by default. However, you can map a quota to a specific user as shown in the next example. That user’s credentials are then propagated to the Aerospike database for quota monitoring.

Setting the rate quota prevents a Spark job from exceeding the predetermined rate. This avoids operational issues with the Aerospike server. If multiple Spark jobs are running, the rate quota applies to the total of all of the jobs, not to each job individually.

The Aerospike server, not the Spark connector, handles usage monitoring and accounting. Users are still responsible for managing the total consumption across all of their Spark jobs. One way to do this is to split the allocated quota among the Spark jobs, then use the rate limiter in each Spark application (described later) to keep every job within its share.
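As a rough illustration of splitting a quota among jobs, the sketch below divides a total quota evenly. The object and method names are hypothetical, an even split is only one possible policy, and the 4000 rps figure is simply the read quota used in the example on this page.

```scala
object QuotaSplit {
  // Divide a user's total quota (records per second) evenly across concurrent jobs.
  // Integer division rounds down, so the shares never add up to more than the total.
  def evenShare(totalQuota: Int, jobs: Int): Int = {
    require(totalQuota >= 0, "quota must not be negative")
    require(jobs > 0, "at least one job is required")
    totalQuota / jobs
  }

  // A 4000 rps read quota shared by 3 concurrent jobs: 1333 rps per job.
  val examplePerJobRead: Int = evenShare(4000, 3)
}
```

Each job would then configure its own rate limiter so that it stays within its share.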

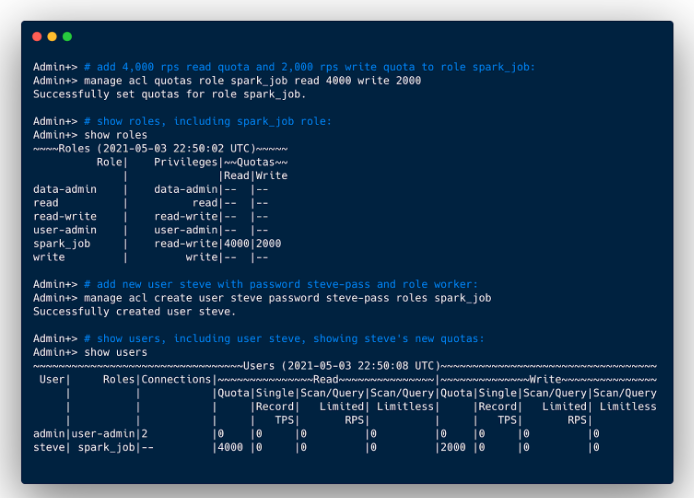

See here for more details on configuring the rate quota. Here's an example that limits the Spark job submitted by Steve to a read quota of 4000 rps and a write quota of 2000 wps.

If a Spark job exceeds (breaches) the quota, it fails. This prevents any single user from consuming more Aerospike resources than have been allocated to them.

The quota breach is recorded in the Spark connector logs, as shown here:

Error 83,1,0,86400000,86400000,2,BB921BAA9934006 172.31.8.230 3000: Quota exceeded

at asdbjavaclientshadecommand.MultiCommand.parseGroup(MultiCommand.java:248)

at asdbjavaclientshadecommand.MultiCommand.parseResult(MultiCommand.java:225)
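If an application wants to tell a quota breach apart from other failures, one option is to look for the "Quota exceeded" text from the log above in the exception chain. The following is a minimal sketch under that assumption. The helper name is hypothetical, and matching on message text is a heuristic, not a documented connector API.

```scala
import scala.annotation.tailrec

object QuotaBreach {
  // Walks the cause chain of a failure and reports whether any message
  // contains the "Quota exceeded" text seen in the connector log.
  @tailrec
  def isQuotaBreach(t: Throwable): Boolean =
    if (t == null) false
    else if (Option(t.getMessage).exists(_.contains("Quota exceeded"))) true
    else isQuotaBreach(t.getCause)
}
```

A job driver could call QuotaBreach.isQuotaBreach on the exception it catches around a read or write, and report quota failures separately from other errors.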

The Spark connector does not currently have a retry mechanism. If your application cannot tolerate a failed Spark job, either work with the Aerospike administrator to raise the quota, or try to avoid being rate-limited. If you prefer the latter, consider using the rate limiter in the Spark application (via the Spark connector).

Set Rate Quotas in the Spark Application

One way to avoid hitting the quota limit is to use the rate limiter in the Spark application for your read or write transactions. This way, you can control your usage and throttle your application’s read or write throughput via the connector without worrying about hitting the quota limit on the Aerospike server.

Throttle Read Throughput

Configure the connector properties aerospike.transaction.rate and aerospike.recordspersecond to activate the rate limiter for reads. These properties are described in detail in the Performance section of the Spark connector configuration page.

Depending on the type of query, aerospike.recordspersecond throttles scans, while aerospike.transaction.rate throttles batch reads and writes. The basic idea is to leave headroom between the allocated quota and the actual read throughput so that the quota is not breached.
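The two properties can be collected in a single options map and handed to the Spark reader. The sketch below only builds that map. The object name and the rate values are hypothetical. Connection settings are omitted.

```scala
object ReadThrottleOptions {
  // Builds the two throttling properties as strings so they can be passed
  // to a Spark reader in one call through DataFrameReader.options.
  def build(recordsPerSecond: Int, transactionRate: Int): Map[String, String] = {
    require(recordsPerSecond > 0, "aerospike.recordspersecond must be positive")
    require(transactionRate > 0, "aerospike.transaction.rate must be positive")
    Map(
      "aerospike.recordspersecond" -> recordsPerSecond.toString,
      "aerospike.transaction.rate" -> transactionRate.toString
    )
  }
}
```

For example, spark.read.format("aerospike").options(ReadThrottleOptions.build(500, 50)) would apply both limits to a read. The values 500 and 50 are placeholders to be derived from your own quota.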

A Spark read operation can be completed in one of two ways: Batch Read or Scan Read. To throttle the read throughput, factor in the number of Aerospike database nodes, Spark partitions, and number of cores in the Spark cluster. The following computations assume there is one Spark core available per Spark partition, and are theoretical ranges.

Assume there are N nodes in the Aerospike cluster and S Spark partitions, where S = 2^aerospike.partition.factor. To stay within the quota set at each Aerospike node, the cumulative read and write request rate from all Spark partitions should be less than the read and write quotas set at the Aerospike nodes. The following computations provide a theoretical range for avoiding quota errors. To stay on the safe side, use the lower bounds when setting these parameters.

The lower bound assumes that all Spark partitions are trying to read from the same Aerospike node, while the upper bound assumes that the Spark partitions' batchget requests are distributed to N/S nodes.

Here, r is the value of aerospike.recordspersecond set in the Spark connector configuration. This value can't be zero if a quota is set in the Aerospike database.

Check with your Aerospike administrator if you're not sure how many Aerospike database nodes are in the Aerospike cluster.
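The sketch below shows one way to turn the lower-bound assumption into a number. It is an interpretation, not the formula from this page: it assumes that r applies to each Spark partition and that, in the worst case, all S partitions hit one node, so S × r should not exceed the read quota. The object and method names are hypothetical.

```scala
object ReadBudget {
  // S = 2^aerospike.partition.factor. The 0..30 range only guards against
  // Int overflow here; it is not the connector's documented limit.
  def sparkPartitions(partitionFactor: Int): Int = {
    require(partitionFactor >= 0 && partitionFactor <= 30, "factor out of range")
    1 << partitionFactor
  }

  // Conservative per-partition rate r under the assumption that all S
  // partitions read from the same node: largest r with S * r <= readQuota.
  // The result is floored at 1 because r can't be zero when a quota is set;
  // if the quota is smaller than S, even r = 1 may breach it.
  def conservativeRate(readQuota: Int, partitionFactor: Int): Int = {
    require(readQuota > 0, "quota must be positive")
    math.max(1, readQuota / sparkPartitions(partitionFactor))
  }
}
```

With a read quota of 4000 and aerospike.partition.factor set to 8, S is 256 and the conservative rate is 15, for a worst-case total of 3840 records per second. Leaving extra headroom below this value is a reasonable precaution.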

Throttle Write Throughput

Configure the connector property aerospike.transaction.rate to activate the rate limiter for writes. This property is described in detail in the Performance section of the Spark connector configuration page.

If your DataFrame consists of 200 partitions, the following code snippet writes about 200 * 9 = 1,800 records per second into the Aerospike database cluster. This is comfortably below the write quota of 2,000 that was allocated earlier to your Spark job.

df.write

.format("aerospike")

.option("aerospike.updateByKey", "reviewerID")

.option("aerospike.set", streamingWriteSet)

.option("aerospike.transaction.rate", 9)

.mode(SaveMode.Append)

.save()

If you are unsure about the number of partitions in your DataFrame, consider calling getNumPartitions() on the DataFrame's underlying RDD. (For example, df.rdd.getNumPartitions().) In the case of Scala, this is a parameterless method: df.rdd.getNumPartitions.
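The arithmetic behind the value 9 can be reversed to derive a rate from a quota. The sketch below assumes, as the example above does, that aerospike.transaction.rate applies to each DataFrame partition. The object name, method name, and headroom percentage are hypothetical.

```scala
object WriteThrottle {
  // Per-partition value for aerospike.transaction.rate that keeps the combined
  // write rate at or below headroomPercent of the write quota.
  def perPartitionRate(writeQuota: Int, partitions: Int, headroomPercent: Int): Int = {
    require(writeQuota > 0, "quota must be positive")
    require(partitions > 0, "at least one partition is required")
    require(headroomPercent > 0 && headroomPercent <= 100, "percent must be in 1..100")
    // Long arithmetic avoids overflow for large quotas; division rounds down.
    val budget = writeQuota.toLong * headroomPercent / 100
    (budget / partitions).toInt
  }
}
```

For a write quota of 2,000, 200 partitions, and a 90 percent target, this yields 9, which matches the snippet above. In a Spark job, the partition count could come from df.rdd.getNumPartitions.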

Verify Successful Throttling

Use an Aerospike data browsing tool to verify that your Spark job has successfully written to and/or read from the database, while honoring the server rate quotas.

Shared Responsibility

The Aerospike administrator is responsible for managing the load on the Aerospike cluster.

This responsibility includes understanding the capacity needs of the Spark applications and accurately reflecting these needs in the quotas being set for the users. The administrator must also communicate quotas to the users so that there is no disruption of service.

Aerospike administrators should not assign the same role to multiple Spark users, or map multiple Spark users to the same Aerospike user. Doing so can create manageability issues.

The Spark application developer is responsible for handling the allocated server quota.

This responsibility includes either failing upon quota breach, or using the connector rate limiting feature in the Spark application to prevent the quota from being breached.

If the allocated quotas are insufficient, we recommend that Spark application developers work with the Aerospike administrator to adjust the quotas.