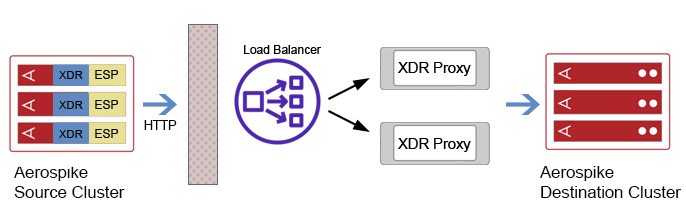

XDR Proxy Record Ordering Architecture

The following is the recommended architecture for preserving record ordering when the XDR Proxy runs behind a load balancer, using the ESP Outbound connector.

We recommend running a separate ESP connector as the XDR destination for every Aerospike source server instance, on the same host as that instance, as shown in the architecture diagram.

For Kubernetes deployments, the ESP connector can be run as a sidecar to the main Aerospike server container. For non-containerized deployments, the ESP connector can be run as a separate service on the same host as the Aerospike server instance.

XDR is then configured with a datacenter (DC) that ships to the local ESP connector, as follows:

    xdr {
        dc esp-proxy-1 {
            connector true
            node-address-port localhost 8901
            namespace sessions {
            }
        }
    }

The ESP connector is configured, through its record-ordering configuration, to preserve the order of different versions of the same Aerospike record.
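The per-record ordering rule can be pictured as a small gate keyed by (namespace, digest). The Python sketch below is a hypothetical illustration of the idea, not the ESP connector's implementation or its record-ordering settings. The class name `PerRecordOrderingGate`, the integer version numbers, and the FIFO queue of pending versions are all assumptions made for the example.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Set, Tuple

# Hypothetical record key: (namespace, digest). Aerospike digests are 20 bytes.
Key = Tuple[str, bytes]


class PerRecordOrderingGate:
    """Allow at most one version of each record to be in flight.

    Illustrative sketch only; not the ESP connector's code.
    """

    def __init__(self) -> None:
        self._in_flight: Set[Key] = set()
        # Versions that arrived while an earlier version was still in flight.
        self._pending: Dict[Key, Deque[int]] = defaultdict(deque)

    def submit(self, key: Key, version: int) -> Optional[int]:
        """Accept a new version; return it if it may be sent right now."""
        if key in self._in_flight:
            self._pending[key].append(version)  # hold until the earlier one is acked
            return None
        self._in_flight.add(key)
        return version

    def ack(self, key: Key) -> Optional[int]:
        """Mark the in-flight version as delivered; return the next one to send."""
        queue = self._pending.get(key)  # .get() does not create an entry
        if queue:
            nxt = queue.popleft()
            if not queue:
                del self._pending[key]
            return nxt  # the key stays in flight with this next version
        self._in_flight.discard(key)
        return None


gate = PerRecordOrderingGate()
k = ("sessions", b"\x01" * 20)
print(gate.submit(k, 1))  # 1    (sent immediately)
print(gate.submit(k, 2))  # None (held until version 1 is acked)
print(gate.ack(k))        # 2    (now sent)
print(gate.ack(k))        # None (nothing pending)
```

In this sketch, records with different keys never block one another; only a record that is updated again while an earlier version is still being shipped has to wait.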

The ESP connector is then configured to ship XDR change notifications to the remote cluster through an XDR Proxy cluster running in HTTP mode, optionally behind a load balancer. The XDR Proxy cluster then ships the change notifications to the Aerospike destination cluster.

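An L7 load balancer distributes individual HTTP requests, so it automatically redistributes the load when the XDR Proxy cluster is scaled. An L4 load balancer chooses a backend only when a TCP connection is opened, so with an L4 load balancer the ESP connector should be configured with a connection TTL. Connections are then periodically reopened, which redistributes the load after the XDR Proxy cluster is scaled.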
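The effect of a connection TTL behind an L4 load balancer can be sketched as a wrapper that reopens its connection once it is older than the TTL. `TtlConnection` and its `connect`, `clock`, and `close` callables are hypothetical stand-ins, not part of any ESP connector API; in the actual deployment the TTL is a connector configuration setting, not user code.

```python
import time
from typing import Callable, Generic, Optional, TypeVar

Conn = TypeVar("Conn")


class TtlConnection(Generic[Conn]):
    """Reopen the underlying connection once it is ttl_seconds old.

    An L4 balancer picks a backend per new TCP connection, so reopening
    lets newly added XDR Proxy nodes start receiving traffic.
    Hypothetical helper for illustration only.
    """

    def __init__(self, connect: Callable[[], Conn], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic,
                 close: Callable[[Conn], None] = lambda c: None) -> None:
        self._connect = connect
        self._ttl = ttl_seconds
        self._clock = clock
        self._close = close
        self._conn: Optional[Conn] = None
        self._opened_at = 0.0

    def get(self) -> Conn:
        """Return a live connection, replacing it if the TTL has expired."""
        now = self._clock()
        if self._conn is not None and now - self._opened_at >= self._ttl:
            self._close(self._conn)
            self._conn = None
        if self._conn is None:
            self._conn = self._connect()  # the L4 balancer chooses a backend here
            self._opened_at = now
        return self._conn


# Usage with a fake clock; each new connection lands on the "next" backend.
backends = iter(["proxy-a", "proxy-b", "proxy-c"])
fake_now = [0.0]
conn = TtlConnection(lambda: next(backends), ttl_seconds=60, clock=lambda: fake_now[0])
print(conn.get())  # proxy-a
fake_now[0] = 30
print(conn.get())  # proxy-a (still within the TTL)
fake_now[0] = 61
print(conn.get())  # proxy-b (TTL expired, reconnected)
```

Because the ESP connector keeps at most one version of a record in flight, switching to a different XDR Proxy node should not, in this simplified model, let two versions of that record overtake each other.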

This architecture prevents out-of-order delivery of different versions of the same record to the destination cluster, which could otherwise arise from network reordering at intermediate components such as the XDR Proxy.


This is achieved by:

- the ESP connector ensuring that only one version of a given record (identified by namespace and digest) is in flight at any time.
- pairing Aerospike server instances with ESP connector instances 1:1. This prevents two different components from handling different versions of the same record (identified by namespace and digest) simultaneously.
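Why the 1:1 pairing matters can be shown with a toy discrete-event simulation. In this hypothetical model, each connector lets only one version per record be in flight and forwards it with a fixed latency. The connector names `A` and `B`, the latencies, and the routing functions are invented for illustration and do not describe real connector behavior.

```python
import heapq
import itertools
from collections import defaultdict, deque
from typing import Callable, Dict, List, Tuple

Key = Tuple[str, bytes]  # hypothetical (namespace, digest)


def simulate(writes: List[Tuple[float, Key, int]],
             latency: Dict[str, float],
             route: Callable[[Key, int], str]) -> List[int]:
    """Return record versions in the order they reach the destination.

    Toy model: per-connector, per-key gating plus fixed per-connector latency.
    """
    seq = itertools.count()  # tie-breaker so the heap never compares payloads
    heap: list = []
    for t, key, version in writes:
        heapq.heappush(heap, (t, next(seq), "write", route(key, version), key, version))
    in_flight = set()             # (connector, key) pairs with a version in flight
    pending = defaultdict(deque)  # (connector, key) -> versions waiting to be sent
    delivered: List[int] = []

    def send(now: float, conn: str, key: Key, version: int) -> None:
        in_flight.add((conn, key))
        heapq.heappush(heap, (now + latency[conn], next(seq), "arrive", conn, key, version))

    while heap:
        now, _, kind, conn, key, version = heapq.heappop(heap)
        slot = (conn, key)
        if kind == "write":
            if slot in in_flight:
                pending[slot].append(version)  # wait for the earlier version
            else:
                send(now, conn, key, version)
        else:  # "arrive": the destination has received this version
            delivered.append(version)
            if pending[slot]:
                send(now, conn, key, pending[slot].popleft())
            else:
                in_flight.discard(slot)
    return delivered


k = ("sessions", b"\x01" * 20)
writes = [(0.0, k, 1), (0.1, k, 2)]
lat = {"A": 1.0, "B": 0.2}
print(simulate(writes, lat, lambda key, v: "A"))                    # [1, 2]
print(simulate(writes, lat, lambda key, v: "A" if v % 2 else "B"))  # [2, 1]
```

Routing every version through connector `A` models the 1:1 pairing: version 2 waits until version 1 has arrived. Splitting the versions across two connectors lets version 2 overtake version 1, because neither connector's gate knows about the other's in-flight version. In this model, a partition ownership change during rebalancing, as described in the caveat below, looks similar: an older version may still be in flight through the previous owner's connector when a newer version is shipped through the new owner's connector.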

Caveat

Different versions of the same record can be delivered to the destination out of order when the source cluster composition changes. This can happen when partition ownership in the Aerospike cluster changes while data is rebalancing.